The Hard Problem of Consciousness

The hard problem of consciousness is the problem of explaining why any physical state is conscious rather than nonconscious. It is the problem of explaining why there is “something it is like” for a subject in conscious experience, why conscious mental states “light up” and directly appear to the subject. The usual methods of science involve explanation of functional, dynamical, and structural properties—explanation of what a thing does, how it changes over time, and how it is put together. But even after we have explained the functional, dynamical, and structural properties of the conscious mind, we can still meaningfully ask the question, Why is it conscious? This suggests that an explanation of consciousness will have to go beyond the usual methods of science. Consciousness therefore presents a hard problem for science, or perhaps it marks the limits of what science can explain. Explaining why consciousness occurs at all can be contrasted with so-called “easy problems” of consciousness: the problems of explaining the function, dynamics, and structure of consciousness. These features can be explained using the usual methods of science. But that leaves the question of why there is something it is like for the subject when these functions, dynamics, and structures are present. This is the hard problem.

In more detail, the challenge arises because it does not seem that the qualitative and subjective aspects of conscious experience—how consciousness “feels” and the fact that it is directly “for me”—fit into a physicalist ontology, one consisting of just the basic elements of physics plus structural, dynamical, and functional combinations of those basic elements. It appears that even a complete specification of a creature in physical terms leaves unanswered the question of whether or not the creature is conscious. And it seems that we can easily conceive of creatures just like us physically and functionally that nonetheless lack consciousness. This indicates that a physical explanation of consciousness is fundamentally incomplete: it leaves out what it is like to be the subject, for the subject. There seems to be an unbridgeable explanatory gap between the physical world and consciousness. All these factors make the hard problem hard.

The hard problem was so named by David Chalmers in 1995. The problem is a major focus of research in contemporary philosophy of mind, and there is a considerable body of related empirical research in psychology, neuroscience, and even quantum physics. The problem touches on issues in ontology, on the nature and limits of scientific explanation, and on the accuracy and scope of introspection and first-person knowledge, to name but a few. Reactions to the hard problem range from an outright denial of the issue to naturalistic reduction to panpsychism (the claim that everything is conscious to some degree) to full-blown mind-body dualism.

Contents

1. Stating the Problem

a. Chalmers

b. Nagel

c. Levine

2. Underlying Reasons for the Problem

3. Responses to the Problem

a. Eliminativism

b. Strong Reductionism

c. Weak Reductionism

d. Mysterianism

e. Interactionist Dualism

f. Epiphenomenalism

g. Dual Aspect Theory/Neutral Monism/Panpsychism

4. References and Further Reading

1. Stating the Problem

a. Chalmers

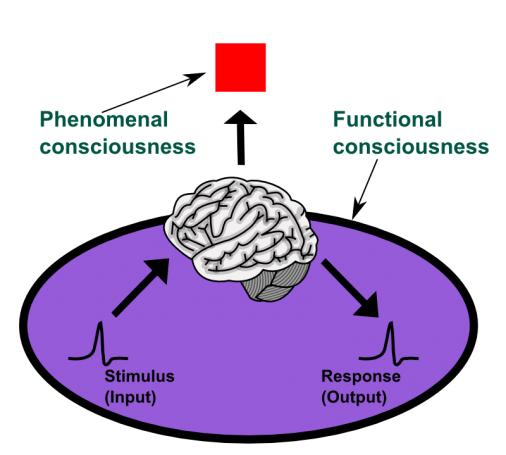

David Chalmers coined the name “hard problem” (1995, 1996), but the problem is not wholly new, being a key element of the venerable mind-body problem. Still, Chalmers is among those most responsible for the outpouring of work on this issue. The problem arises because “phenomenal consciousness,” consciousness characterized in terms of “what it’s like for the subject,” fails to succumb to the standard sort of functional explanation successful elsewhere in psychology (compare Block 1995). Psychological phenomena like learning, reasoning, and remembering can all be explained in terms of playing the right “functional role.” If a system does the right thing, if it alters behavior appropriately in response to environmental stimulation, it counts as learning. Specifying these functions tells us what learning is and allows us to see how brain processes could play this role. But according to Chalmers,

What makes the hard problem hard and almost unique is that it goes beyond problems about the performance of functions. To see this, note that even when we have explained the performance of all the cognitive and behavioral functions in the vicinity of experience—perceptual discrimination, categorization, internal access, verbal report—there may still remain a further unanswered question: Why is the performance of these functions accompanied by experience? (1995, 202, emphasis in original).

Chalmers explains the persistence of this question by arguing against the possibility of a “reductive explanation” for phenomenal consciousness (hereafter, I will generally just use the term ‘consciousness’ for the phenomenon causing the problem). A reductive explanation in Chalmers’s sense (following David Lewis 1972) provides a form of deductive argument concluding with an identity statement between the target explanandum (the thing we are trying to explain) and a lower-level phenomenon that is physical in nature or more obviously reducible to the physical. Reductive explanations of this type have two premises. The first presents a functional analysis of the target phenomenon, which fully characterizes the target in terms of its functional role. The second presents an empirically discovered realizer of the functionally characterized target, one playing that very functional role. Then, by transitivity of identity, the target and realizer are deduced to be identical. For example, the gene may be reductively explained in terms of DNA as follows:

1. The gene = the unit of hereditary transmission. (By analysis.)

2. Regions of DNA = the unit of hereditary transmission. (By empirical investigation.)

3. Therefore, the gene = regions of DNA. (By transitivity of identity, 1, 2.)

Chalmers contends that such reductive explanations are available in principle for all other natural phenomena, but not for consciousness. This is the hard problem.

The reason that reductive explanation fails for consciousness, according to Chalmers, is that it cannot be functionally analyzed. This is demonstrated by the continued conceivability of what Chalmers terms “zombies”—creatures physically (and so functionally) identical to us, but lacking consciousness—even in the face of a range of proffered functional analyses. If we had a satisfying functional analysis of consciousness, zombies should not be conceivable. The lack of a functional analysis is also shown by the continued conceivability of spectrum inversion (perhaps what it looks like for me to see green is what it looks like when you see red), the persistence of the “other minds” problem, the plausibility of the “knowledge argument” (Jackson 1982) and the manifest implausibility of offered functional characterizations. If consciousness really could be functionally characterized, these problems would disappear. Since they retain their grip on philosophers, scientists, and lay-people alike, we can conclude that no functional characterization is available. But then the first premise of a reductive explanation cannot be properly formulated, and reductive explanation fails. We are left, Chalmers claims, with the following stark choice: either eliminate consciousness (deny that it exists at all) or add consciousness to our ontology as an unreduced feature of reality, on par with gravity and electromagnetism. Either way, we are faced with a special ontological problem, one that resists solution by the usual reductive methods.

b. Nagel

Thomas Nagel sees the problem as turning on the “subjectivity” of conscious mental states (1974, 1986). He argues that the facts about conscious states are inherently subjective—they can only be fully grasped from limited types of viewpoints. However, scientific explanation demands an objective characterization of the facts, one that moves away from any particular point of view. Thus, the facts about consciousness elude science and so make “the mind-body problem really intractable” (Nagel 1974, 435).

Nagel argues for the inherent subjectivity of the facts about consciousness by reflecting on the question of what it is like to be a bat—for the bat. It seems that no amount of objective data will provide us with this knowledge, given that we do not share its type of point of view (the point of view of a creature able to fly and echolocate). Learning all we can about the brain mechanisms, biochemistry, evolutionary history, psychophysics, and so forth, of a bat still leaves us unable to discover (or even imagine) what it’s like for the bat to hunt by echolocation on a dark night. But it is still plausible that there are facts about what it’s like to be a bat, facts about how things seem from the bat’s perspective. And even though we may have good reason to believe that consciousness is a physical phenomenon (due to considerations of mental causation, the success of materialist science, and so on), we are left in the dark about the bat’s conscious experience. This is the hard problem of consciousness.

c. Levine

Joseph Levine argues that there is a special “explanatory gap” between consciousness and the physical (1983, 1993, 2001). The challenge of closing this explanatory gap is the hard problem. Levine argues that a good scientific explanation ought to deductively entail what it explains, allowing us to infer the presence of the target phenomenon from a statement of laws or mechanisms and initial conditions (Levine 2001, 74-76). Deductive entailment is a logical relation in which, if the premises of an argument are true, the conclusion must be true as well. For example, once we discover that lightning is nothing more than an electrical discharge, knowing that the proper conditions for a relevantly large electrical discharge existed in the atmosphere at time t allows us to deduce that lightning must have occurred at time t. If such a deduction is not possible, there are three possible reasons, according to Levine. The first is that we have not fully specified the laws or mechanisms cited in our explanation. The second is that the target phenomenon is stochastic in nature, so that the best we can infer is a conclusion about the probability that the explanatory target occurs. The third is that as yet unknown factors are at least partially involved in determining the phenomenon in question. If we have adequately specified the laws and mechanisms in question, and if we have adjusted for stochastic phenomena, then either we should be able to deduce our explanatory target or the third possibility is in effect. But the third possibility is “precisely an admission that we don't have an adequate explanation” (2001, 76).

And this is the case with consciousness, according to Levine. No matter how detailed our specification of brain mechanisms or physical laws, it seems that there is an open question about whether consciousness is present. We can still meaningfully ask if consciousness occurred, even if we accept that the laws, mechanisms, and proper conditions are in place. And it seems that any further information of this type that we add to our explanation will still suffer from the same problem. Thus, there is an explanatory gap between the physical and consciousness, leaving us with the hard problem.

2. Underlying Reasons for the Problem

But what is it about consciousness that generates the hard problem? It may just seem obvious that consciousness could not be physical or functional. But it is worthwhile to try to draw a rough circle around the problematic features of conscious experience, if we can. This both clarifies what we are talking about when we talk about consciousness and helps isolate the data a successful theory must explain.

Uriah Kriegel (2009; see also Levine 2001) offers a helpful conceptual division of consciousness into two components. Starting with the standard understanding of conscious states as states there is something it’s like for the organism to be in, Kriegel notes that we can either focus on the fact that something appears for the organism or we can focus on what it is that appears, the something it’s like. Focusing on the former, we find that subjects are aware of their conscious states in a distinctive way. Kriegel labels this feature the subjective component of consciousness. Focusing on the latter, we find the experienced character of consciousness (the “redness of red” or the painfulness of pain), often termed “qualia” or “phenomenal character” in the literature (compare Crane 2000). Kriegel labels this the qualitative component of consciousness.

Subdividing consciousness in this way allows us to concentrate on how we are conscious and what we are conscious of. When we focus on the subjective “how” component, we find that conscious states are presented to the subject in a seemingly immediate way. And when we focus on the qualitative “what” component, we find that consciousness presents us with seemingly indescribable qualities which in principle can vary independently of mental functioning. These features help explain why consciousness generates the hard problem.

The first feature, which we can call immediacy, concerns the way we access consciousness from the first-person perspective. Conscious states are accessed in a seemingly unmediated way. It appears that nothing comes between us and our conscious states. We seem to access them simply by having them—we do not infer their presence by way of any evidence or argument. This immediacy creates the impression that there is no way we could be wrong about the content of our conscious states. Error in perception or error in reasoning can be traced back to poor perceptual conditions or to a failure of rational inference. But in the absence of such accessible sources of error, it seems that there is no room for inaccuracy in the introspective case. And even if we come to believe we are in error in introspection, the evidence for this will be indirect and third-personal—it will lack the subjective force of immediacy. Thus, there is an intuition of special accuracy or even infallibility when it comes to knowing our own conscious states. We might be wrong that an object in the world is really red, but can we be wrong that it seems red to us? But if we cannot be wrong about how things seem to us and conscious states seem inexplicable, then they really are inexplicable. In this way, the immediacy of the subjective component of consciousness underwrites the hard problem.

But what we access may be even more problematic than how we access it: we might, after all, have had immediate access to the physical nature of our conscious states (see P.M. Churchland 1985). Instead, conscious experience reveals various sensory qualities—the redness of the visual experience of an apple or the painfulness of a stubbed toe, for example. And these qualities seem to defy informative description. If one has not experienced them, then no amount of description will adequately convey what it’s like to have such an experience with these qualities. We can call this feature of the qualitative component of consciousness indescribability. If someone has never seen red (a congenitally blind person, for example), it seems there is nothing informative we could say to convey to them the true nature of this quality. We might mention prototypical red objects or explain that red is more similar to purple than it is to green, but such descriptions seem to leave the quality itself untouched. And if experienced qualities cannot be informatively described, how could they be adequately captured in an explanatory theory? It seems that by their very nature, conscious qualities defy explanation. This difficulty lies at the heart of the hard problem.

A further problematic feature of what we access is that we can easily imagine our conscious mental processes occurring in conjunction with different conscious qualities or in the absence of consciousness altogether. The particular qualities that accompany specific mental operations—like the reddish quality accompanying our detection and categorization of an apple, say—seem only contingently connected to the functional processes involved in detection and categorization. We can call this feature of what is accessed independence. Independence is the apparent lack of connection between conscious qualities and anything else, and it underwrites the inverted and absent qualia thought experiments used by Chalmers to establish the hard problem (compare Block 1980). If conscious qualities really are independent in this way, then there seems to be no way to effectively tie them to the rest of reality.

The challenge of the hard problem, then, is to explain consciousness given that it seems to give us immediate access to indescribable and independent qualities. If we can explain these underlying features, then we may see how to fit consciousness into a physicalist ontology. Or perhaps taking these features seriously motivates a rejection of physicalism and the acceptance of conscious qualities as fundamental features of our ontology. The following section briefly surveys the range of responses to the hard problem, from eliminativism and reductionism to panpsychism and full-blown dualism.

3. Responses to the Problem

a. Eliminativism

Eliminativism holds that there is no hard problem of consciousness because there is no consciousness to worry about in the first place. Eliminativism is most clearly defended by Rey 1997, but see also Dennett 1978, 1988, Wilkes 1984, and Ryle 1949. On the face of it, this response sounds absurd: how can one deny that conscious experience exists? Consciousness might be the one thing that is certain in our epistemology. But eliminativist views resist the idea that what we call experience is equivalent to consciousness, at least in the phenomenal, “what it’s like” sense. They hold that consciousness so-conceived is a philosopher’s construction, one that can be rejected without absurdity. If it is definitional of consciousness that it is nonfunctional, then holding that the mind is fully functional amounts to a denial of consciousness. Alternately, if qualia are construed as nonrelational, intrinsic qualities of experience, then one might deny that qualia exist (Dennett 1988). And if qualia are essential to consciousness, this, too, amounts to an eliminativism about consciousness.

What might justify consciousness eliminativism? First, the very notion of consciousness, upon close examination, may not have well-defined conditions of application—there may be no single phenomenon that the term picks out (Wilkes 1984). Or the term may serve no purpose at all in any scientific theory, and so may drop out of a scientifically-fixed ontology (Rey 1997). If science tells us what there is (as some naturalists hold), and science has no place for nonfunctional intrinsic qualities, then there is no consciousness, so defined. Finally, it might be that the term ‘consciousness’ gets its meaning as part of a falsifiable theory, our folk psychology. The entities posited by a theory stand or fall with the success of the theory. If the theory is falsified, then the entities it posits do not exist (compare P.M. Churchland 1981). And there is no guarantee that folk psychology will not be supplanted by a better theory of the mind, perhaps a neuroscientific or even quantum mechanical theory, at some point. Thus, consciousness might be eliminated from our ontology. If that occurs, obviously there is no hard problem to worry about. No consciousness, no problem!

But eliminativism seems much too strong a reaction to the hard problem, one that throws the baby out with the bathwater. First, it is highly counterintuitive to deny that consciousness exists. It seems extremely basic to our conception of minds and persons. A more desirable view would avoid this move. Second, it is not clear why we must accept that consciousness, by definition, is nonfunctional or intrinsic. Definitional, “analytic” claims are highly controversial at best, particularly with difficult terms like ‘consciousness’ (compare Quine 1951, Wittgenstein 1953). A better solution would hold that consciousness still exists, but it is functional and relational in nature. This is the strong reductionist approach.

b. Strong Reductionism

Strong reductionism holds that consciousness exists, but contends that it is reducible to tractable functional, nonintrinsic properties. Strong reductionism further claims that the reductive story we tell about consciousness fully explains, without remainder, all that needs to be explained about consciousness. Reductionism, generally, is the idea that complex phenomena can be explained in terms of the arrangement and functioning of simpler, better understood parts. Key to strong reductionism, then, is the idea that consciousness can be broken down and explained in terms of simpler things. This amounts to a rejection of the idea that experience is simple and basic, that it stands as a kind of epistemic or metaphysical “ground floor.” Strong reductionists must hold that consciousness is not as it prima facie appears, that it only seems to be marked by immediacy, indescribability, and independence and therefore that it only seems nonfunctional and intrinsic. Consciousness, according to strong reductionism, can be fully analyzed and explained in functional terms, even if it does not seem that way.

A number of prominent strongly reductive theories exist in the literature. Functionalist approaches hold that consciousness is nothing more than a functional process. A popular version of this view is the “global workspace” hypothesis, which holds that conscious states are mental states available for processing by a wide range of cognitive systems (Baars 1988, 1997; Dehaene & Naccache 2001). They are available in this way by being present in a special network—the “global workspace.” This workspace can be functionally characterized and it also can be given a neurological interpretation. In answer to the question “why are these states conscious?” it can be replied that this is what it means to be conscious. If a state is available to the mind in this way, it is a conscious state (see also Dennett 1991). (For more neuroscientifically-focused versions of the functionalist approach, see P.S. Churchland 1986; Crick 1994; and Koch 2004.)

Another set of views that can be broadly termed functionalist are “enactive” or “embodied” approaches (Hurley 1998, Noë 2005, 2009). These views hold that mental processes should not be characterized in terms of strictly inner processes or representations. Rather, they should be cashed out in terms of the dynamic processes connecting perception, bodily and environmental awareness, and behavior. These processes, the views contend, do not strictly depend on processes inside the head; rather, they loop out into the body and the environment. Further, the nature of consciousness is tied up with behavior and action—it cannot be isolated as a passive process of receiving and recording information. These views are cataloged as functionalist because of the way they answer the hard problem: these physical states (constituted in part by bodily and worldly things) are conscious because they play the right functional role; that is, they do the right thing.

Another strongly reductive approach holds that conscious states are states representing the world in the appropriate way (Dretske 1995, Tye 1995, 2000). This view, known as “first-order representationalism,” contends that conscious states make us aware of things in the world by representing them. Further, these representations are “nonconceptual” in nature: they represent features even if the subject in question lacks the concepts needed to cognitively categorize those features. But these nonconceptual representations must play the right functional role in order to be conscious. They must be poised to influence the higher-level cognitive systems of a subject. The details of these representations differ from theorist to theorist, but a common answer to the hard problem emerges. First-order representational states are conscious because they do the right thing: they make us aware of just the sorts of features that make up conscious experience, features like the redness of an apple, the sweetness of honey, or the shrillness of a trumpet. Further, such representations are conscious because they are poised to play the right role in our understanding of the world—they serve as the initial layer of our epistemic contact with reality, a layer we can then use as the basis of our more sophisticated beliefs and theories.

A further point serves to support the claims of first-order representationalism. When we reflect on our experience in a focused way, we do not seem to find any distinctively mental properties. Rather, we find the very things first-order representationalism claims we represent: the basic sensory features of the world. If I ask you to reflect closely on your experience of a tree, you do not find special mental qualities. Rather, you find the tree, as it appears to you, as you represent it. This consideration, known as “transparency,” seems to undermine the claim that we need to posit special intrinsic qualia, seemingly irreducible properties of our experiences (Harman 1990, though see Kind 2003). Instead, we can explain all that we experience in terms of representation. We have a red experience because we represent physical red in the right way. It is then argued that representation can be given a reductive explanation. Representation, even the sort of representation involved in experience, is no more than various functional/physical processes of our brains tracking the environment. It follows that there is no further hard problem to deal with.

A third type of strongly reductive approach is higher-order representationalism (Armstrong 1968, 1981; Rosenthal 1986, 2005; Lycan 1987, 1996, 2001; Carruthers 2000, 2005). This view starts with the question of what accounts for the difference between conscious and nonconscious mental states. Higher-order theorists hold that an intuitive answer is that we are appropriately aware of our conscious states, while we are unaware of our nonconscious states. The task of a theory of consciousness, then, is to explain the awareness accounting for this difference. Higher-order representationalists contend that the awareness is a product of a specific sort of representation, a representation that picks out the subject’s own mental states. These “higher-order” representations (representations of other representations) make the subject aware of her states, thus accounting for consciousness. In answer to the hard problem, the higher-order theorist responds that these states are conscious because the subject is appropriately aware of them by way of higher-order representation. The higher-order representations themselves are held to be nonconscious. And since representation can plausibly be reduced to functional/physical processes, there is no lingering problem to explain (though see Gennaro 2005 for more on this strategy).

A final strongly reductive view, “self-representationalism,” holds that troubles with the higher-order view demand that we characterize the awareness subjects have of their conscious states as a kind of self-representation, where one complex representational state is about both the world and that very state itself (Gennaro 1996, Kriegel 2003, 2009, Van Gulick 2004, 2006, Williford 2006). It may seem paradoxical to say that a state can represent itself, but this can be dealt with by holding that the state represents itself in virtue of one part of the state representing another, and thereby coming to indirectly represent the whole. Further, self-representationalism may provide the best explanation of the seemingly ubiquitous presence of self-awareness in conscious experience. And, again, in answer to the question of why such states are conscious, the self-representationalist can respond that conscious states are ones the subject is aware of, and self-representationalism explains this awareness. And since self-representation, properly construed, is reducible to functional/physical processes, we are left with a complete explanation of consciousness. (For more details on how higher-order/self-representational views deal with the hard problem, see Gennaro 2012, chapter 4.)

However, there remains considerable resistance to strongly reductive views. The main stumbling block is that they seem to leave unaddressed the pressing intuition that one can easily conceive of a system satisfying all the requirements of the strongly reductive views but still lacking consciousness (Chalmers 1996, chapter 3). It is argued that an effective theory ought to close off such easy conceptions. Further, strong reductionists seem committed to the claim that there is no knowledge of consciousness that cannot be grasped theoretically. If a strongly reductive view is true, it seems that a blind person can gain full knowledge of color experience from a textbook. But surely she still lacks some knowledge of what it’s like to see red, for example? Strongly reductive theorists can contend that these recalcitrant intuitions are merely a product of lingering confused or erroneous views of consciousness. But in the face of such worries, many have felt it better to find a way to respect these intuitions while still denying the potentially unpleasant ontological implications of the hard problem. Hence, weak reductionism.

c. Weak Reductionism

Weak reductionism, in contrast to the strong version, holds that consciousness is a simple or basic phenomenon, one that cannot be informatively broken down into simpler nonconscious elements. But according to the view we can still identify consciousness with physical properties if the most parsimonious and productive theory supports such an identity (Block 2002, Block & Stalnaker 1999, Hill 1997, Loar 1997, 1999, Papineau 1993, 2002, Perry 2001). What’s more, once the identity has been established, there is no further burden of explanation. Identities have no explanation: a thing just is what it is. To ask how it could be that Mark Twain is Sam Clemens, once we have the most parsimonious rendering of the facts, is to go beyond meaningful questioning. And the same holds for the identity of conscious states with physical states.

But there remains the question of why the identity claim appears so counterintuitive, and here weak reductionists generally appeal to the “phenomenal concepts strategy” (PCS) to make their case (compare Stoljar 2005). The PCS holds that the hard problem is not the result of a dualism of facts, phenomenal and physical, but rather a dualism of concepts picking out fully physical conscious states. One concept is the third-personal physical concept of neuroscience. The other concept is a distinctively first-personal “phenomenal concept”—one that picks out conscious states in a subjectively direct manner. Because of the subjective differences in these modes of conceptual access, consciousness does not seem intuitively to be physical. But once we understand the differences in the two concepts, there is no need to accept this intuition.

Here is a sketch of how a weakly reductive view of consciousness might proceed. First, we find stimuli that reliably trigger reports of phenomenally conscious states from subjects. Then we find what neural processes are reliably correlated with those reported experiences. It can then be argued on the basis of parsimony that the reported conscious state just is the neural state—an ontology holding that two states are present is less simple than one identifying the two states. Further, accepting the identity is explanatorily fruitful, particularly with respect to mental causation. Finally, the PCS is appealed to in order to explain why the identity remains counterintuitive. And as to the question of why this particular neural state should be identical to this particular phenomenal state, the answer is that this is just the way things are. Explanation bottoms out at this point and requests for further explanation are unreasonable.

But there are pressing worries about weak reductionism. There seems to be an undischarged phenomenal element within the weakly reductive view (Chalmers 2006). When we focus on the PCS, it seems that we lack a plausible story about how phenomenal concepts reveal what it’s like for us in experience. The direct access of phenomenal concepts seems to require that phenomenal states themselves inform us of what they are like. A common way to cash out the PCS is to say that the phenomenal properties themselves are embedded in the phenomenal concepts, and that this alone makes them accessible in the seemingly rich manner of introspected experience. When it is asked how phenomenal properties might underwrite this access, the answer given is that this is in the nature of phenomenal properties—that is just what they do. Again, we are told that explanation must stop somewhere. But at this point, there seems to be little to distinguish the weak reductionist from the various forms of nonreductive and dualistic views cataloged below. They, too, hold that it is in the nature of phenomenal properties to underwrite first-person access. But they hold that there is no good reason to think that properties with this sort of nature are physical. We know of no other physical property that possesses such a nature. All that we are left with to recommend weak reductionism is a thin claim of parsimony and an overly strong fealty to physicalism. We are asked to accept a brute identity here, one that seems unprecedented in our ontology given that consciousness is a macro-level phenomenon. Other examples of such brute identity (the unification of electricity and magnetism into a single force, say) occur at the foundational level of physics. Neurological and phenomenal properties do not seem to be basic in this way. We are left with phenomenal properties inexplicable in physical terms, “brutally” identified with neurological properties in a way that nothing else seems to be. Why not take all this as an indication that phenomenal properties are not physical after all?

The weak reductionist can respond that the question of mental causation still provides a strong enough reason to hold onto physicalism. A plausible scientific principle is that the physical world is causally closed: all physical events have physical causes. And since our bodies are physical, it seems that denying that consciousness is physical renders it epiphenomenal. The apparent implausibility of epiphenomenalism may be enough to motivate adherence to weak reductionism, even with its explanatory shortcomings. Dualistic challenges to this claim will be discussed in later sections.

It is possible, however, to embrace weak reductionism and still acknowledge that some questions remain to be answered. For example, it might be reasonable to demand some explanation of how particular neural states correlate with differences in conscious experience. A weak reductionist might hold that this is a question we cannot at present answer. It may be that one day we will be in a position to do so, due to a radical shift in our understanding of consciousness or physical reality. Or perhaps this will remain an unsolvable mystery, one beyond our limited abilities to decipher. Still, there may be good reasons to hold at present that the most parsimonious metaphysical picture is the physicalist picture. The line between weak reductionism and the next set of views to be considered, mysterianism, may blur considerably here.

d. Mysterianism

The mysterian response to the hard problem does not offer a solution; rather, it holds that the hard problem cannot be solved by current scientific method and perhaps cannot be solved by human beings at all. There are two varieties of the view. The more moderate version of the position, which can be termed “temporary mysterianism,” holds that given the current state of scientific knowledge, we have no explanation of why some physical states are conscious (Nagel 1974, Levine 2001). The gap between experience and the sorts of things dealt with in modern physics—functional, structural, and dynamical properties of basic fields and particles—is simply too wide to be bridged at present. Still, it may be that some future conceptual revolution in the sciences will show how to close the gap. Such massive conceptual reordering is certainly possible, given the history of science. And, indeed, if one accepts the Kuhnian idea of shifts between incommensurate paradigms, it might seem unsurprising that we, pre-paradigm-shift, cannot grasp what things will be like after the revolution. But at present we have no idea how the hard problem might be solved.

Thomas Nagel, in sketching his version of this idea, calls for a future “objective phenomenology” which will “describe, at least in part, the subjective character of experiences in a form comprehensible to beings incapable of having those experiences” (1974, 449). Without such a new conceptual system, Nagel holds, we are left unable to bridge the gap between consciousness and the physical. Consciousness may indeed be physical, but at present we have no idea how this could be so.

It is, of course, open to both weak and strong reductionists to accept a version of temporary mysterianism. They can agree that at present we do not know how consciousness fits into the physical world, but the possibility is open that future science will clear up the mystery. The main difference between such claims by reductionists and by mysterians is that the mysterians reject the idea that current reductive proposals do anything at all to close the gap. How different the explanatory structure must be to count as truly new, and not merely an extension of the old, cannot be gauged with any precision. So the difference between a very weak reductionist and a temporary, though optimistic, mysterian may not amount to much.

The stronger version of the position, “permanent mysterianism,” argues that our ignorance in the face of the hard problem is not merely transitory, but is permanent, given our limited cognitive capacities (McGinn 1989, 1991). We are like squirrels trying to understand quantum mechanics: it just is not going to happen. The main exponent of this view is Colin McGinn, who argues that a solution to the hard problem is “cognitively closed” to us. He supports his position by stressing the consequences of a modular view of the mind, inspired in part by Chomsky’s work in linguistics. Our mind just may not be built to solve this sort of problem. Instead, it may be composed of dedicated, domain-specific “modules” devoted to solving local, specific problems for an organism. An organism without a dedicated “language acquisition device” equipped with “universal grammar” cannot acquire language. Perhaps the hard problem requires cognitive apparatus we just do not possess as a species. If that is the case, no further scientific or philosophical breakthrough will make a difference. We are not built to solve the problem: it is cognitively closed to us.

A worry about such a claim is that it is hard to establish just what sorts of problems are permanently beyond our ken. It seems possible that the temporary mysterian may be correct here, and what looks unbridgeable in principle is really just a temporary roadblock. Both the temporary and permanent mysterian agree on the evidence. They agree that there is a real gap at present between consciousness and the physical and they agree that nothing in current science seems up to the task of solving the problem. The further claim that we are forever blocked from solving the problem turns on controversial claims about the nature of the problem and the nature of our cognitive capacities. Perhaps those controversial claims will be made good, but at present, it is hard to see why we should give up all hope, given the history of surprising scientific breakthroughs.

e. Interactionist Dualism

Perhaps, though, we know enough already to establish that consciousness is not a physical phenomenon. This brings us to what has been, historically speaking, the most important response to the hard problem and the more general mind-body problem: dualism, the claim that consciousness is ontologically distinct from anything physical. Dualism, in its various forms, reasons from the explanatory, epistemological, or conceptual gaps between the phenomenal and the physical to the metaphysical conclusion that the physicalist worldview is incomplete and needs to be supplemented by the addition of irreducibly phenomenal substance or properties.

Dualism can be unpacked in a number of ways. Substance dualism holds that consciousness makes up a distinct fundamental “stuff” which can exist independently of any physical substance. Descartes’ famous dualism was of this kind (Descartes 1640/1984). A more popular modern dualist option is property dualism, which holds that the conscious mind is not a separate substance from the physical brain, but that phenomenal properties are nonphysical properties of the brain. On this view, it is metaphysically possible that the physical substrate occurs without the phenomenal properties, indicating their ontological independence, but phenomenal properties cannot exist on their own. The properties might emerge from some combination of nonphenomenal properties (emergent dualism—compare Broad 1925) or they might be present as a fundamental feature of reality, one that is lawfully correlated with physical matter in our world but could in principle come apart from the physical in another possible world.

A key question for dualist views concerns the relationship between consciousness and the physical world, particularly our physical bodies. Descartes held that conscious mental properties can have a causal impact upon physical matter—this is known as interactionist dualism. Recent defenders of interactionist dualism include Foster 1991, Hodgson 1991, Lowe 1996, Popper and Eccles 1977, H. Robinson 1982, Stapp 1993, and Swinburne 1986. However, interactionist dualism requires rejecting the “causal closure” of the physical domain, the claim that every physical event is fully determined by a physical cause. Causal closure is a long-held principle in the sciences, so its rejection marks a strong break from current scientific orthodoxy (though see Collins 2011). Another species of dualism accepts the causal closure of physics, but still holds that phenomenal properties are metaphysically distinct from physical properties. This compatibilism is achieved at the price of epiphenomenalism about consciousness: the view that conscious properties can be caused by physical events but cannot in turn cause physical events. I will discuss interactionist dualism in this section, including a consideration of how quantum mechanics might open up a workable space for an acceptable dualist interactionist view. I will discuss epiphenomenalism in the following section.

Interactionist dualism, of both the substance and property type, holds that consciousness is causally efficacious in the production of bodily behavior. This is certainly a strongly intuitive position to take with regard to mental causation, but it requires rejecting the causal closure of the physical. It is widely thought that the principle of causal closure is central to modern science, on a par with basic conservation principles like the conservation of energy or matter in a physical reaction (see, for example, Kim 1998). And at macroscopic scales, the principle appears well supported by empirical evidence. However, at the quantum level it is more plausible to question causal closure. On one reading of quantum mechanics, quantum-level events unfold deterministically until an observation occurs. At that point, some views hold, the progression of events becomes indeterministic. If so, there may be room for consciousness to influence how the quantum “wave function” collapses into the classical, observable macroscopic world we experience. How such a process occurs is the subject of speculative theorizing in quantum theories of consciousness. It may be that such views are better cataloged as physicalist: the properties involved might well be labeled as physical in a completed science (see, for example, Penrose 1989, 1994; Hameroff 1998). If so, the quantum view is better seen as strongly or weakly reductive.

Still, it might be that the proper cashing out of the idea of “observation” in quantum theory requires positing consciousness as an unreduced primitive. Observation may require something intrinsically conscious, rather than something characterized in the relational terms of physical theory. In that case, phenomenal properties would be metaphysically distinct from the physical, traditionally characterized, while playing a key role in physical theory—the role of collapsing the wave function by observation. Thus, there seems to be theoretical space for a dualist view which rejects closure but maintains a concordance with basic physical theory.

Still, such views face considerable challenges. They are beholden to particular interpretations of quantum mechanics, and this is far from a settled field, to put it mildly. It may well be that the best interpretation of quantum mechanics rejects the key assumption of indeterminacy here (see Albert 1993 for the details of this debate). Further, the kinds of indeterminacies discoverable at the quantum level may not correspond in any useful way to our ordinary idea of mental causes. The pattern of wave-function collapse may have little to do with my conscious desire to grab a beer causing me to go to the fridge. Finally, there is the question of how phenomenal properties at the quantum level come together to make up the conscious experience we have. Our conscious mental lives are not themselves quantum phenomena—how, then, do micro-phenomenal quantum-level properties combine to constitute our experiences? Nonetheless, this is an alluring area of investigation, bringing together the mysteries of consciousness and quantum mechanics. But such a mix may only compound our explanatory troubles!

f. Epiphenomenalism

A different dualistic approach accepts the causal closure of physics by holding that phenomenal properties have no causal influence on the physical world (Campbell 1970, Huxley 1874, Jackson 1982, and W.S. Robinson 1988, 2004). Thus, any physical effect, like a bodily behavior, will have a fully physical cause. Phenomenal properties merely accompany causally efficacious physical properties, but they are not involved in making the behavior happen. Phenomenal properties, on this view, may be lawfully correlated with physical properties, thus assuring that whenever a brain event of a particular type occurs, a phenomenal property of a particular type occurs. For example, it may be that bodily damage causes activity in the amygdala, which in turn causes pain-appropriate behavior like screaming or cringing. The activity in the amygdala will also cause the tokening of phenomenal pain properties. But these properties are out of the causal chain leading to the behavior. They are like the activity of a steam whistle relative to the causal power of the steam engine moving a train’s wheels.

Such a view has no obvious logical flaw, but it is in strong conflict with our ordinary notions of how conscious states are related to behavior. It is extremely intuitive that our pains at times cause us to scream or cringe. But on the epiphenomenalist view, that cannot be the case. What’s more, our knowledge of our conscious states cannot be caused by the phenomenal qualities of our experiences. On the epiphenomenalist view, my knowledge that I’m in pain is not caused by the pain itself. This, too, seems absurd: surely, the feeling of pain is causally implicated in my knowledge of that pain! But the epiphenomenalist can simply bite the bullet here and reject the commonsense picture. We often discover odd things when we engage in serious investigation, and this may be one of them. Denying commonsense intuition is better than denying a basic scientific principle like causal closure, according to epiphenomenalists. And it may be that experimental results in the sciences undermine the causal efficacy of consciousness as well, so this is not so outrageous a claim (see, for example, Libet 2004; Wegner 2002). Further, the epiphenomenalist can deny that we need a causal theory of first-person knowledge. It may be that our knowledge of our conscious states is achieved by a unique kind of noncausal acquaintance. Or maybe merely having the phenomenal states is enough for us to know of them—our knowledge of consciousness may be constituted by phenomenal states, rather than caused by them. The role of causation in knowledge is a difficult philosophical area in general, so it may be reasonable to offer alternatives to the causal theory in this context. But despite these possibilities, epiphenomenalism remains a difficult view to embrace because of its strongly counterintuitive nature.

g. Dual Aspect Theory/Neutral Monism/Panpsychism

A final set of views, close in spirit to dualism, holds that phenomenal properties cannot be reduced to more basic physical properties, but might reduce to something more basic still, a substance that is both physical and phenomenal or that underwrites both. Defenders of such views agree with dualists that the hard problem forces a rethinking of our basic ontology, but they disagree that this entails dualism. There are several variations of the idea. It may be that there is a more basic substance underlying all physical matter and this basic substance possesses phenomenal as well as physical properties (dual aspect theory: Spinoza 1677/2005, P. Strawson 1959, Nagel 1986). Or it may be that this more basic substance is “neutral”—neither phenomenal nor physical, yet somehow underlying both (neutral monism: Russell 1927, Feigl 1958, Maxwell 1979, Lockwood 1989, Stubenberg 1998, Stoljar 2001, G. Strawson 2008). Or it may be that phenomenal properties are the intrinsic categorical bases for the relational, dispositional properties described in physics and so everything physical has an underlying phenomenal nature (panpsychism: Leibniz 1714/1989, Whitehead 1929, Griffin 1998, Rosenberg 2005, Skrbina 2007). These views have all received detailed elaboration in past eras of philosophy, but they have seen a distinct revival as responses to the hard problem.

There is considerable variation in how theorists unpack these kinds of views, so it is only possible here to give generic versions of the ideas. All three views make consciousness as basic as, or more basic than, physical properties; this is something they share with dualism. But they disagree about the right way to spell out the metaphysical relations between the phenomenal, the physical, and any more basic substance there might be. The true differences between the views are not always clear even to the views’ defenders, but we can try to tease them apart here.

A dual-aspect view holds that there is one basic underlying stuff that possesses both physical and phenomenal properties. These properties may only be instantiated when the right combinations of the basic substance are present, so panpsychism is not a necessary entailment of the view. For example, when the basic substance is configured in the form of a brain, it then realizes phenomenal as well as physical properties. But that need not be the case when the fundamental stuff makes up a table. In any event, phenomenal properties are not themselves reducible to physical properties. There is a fine line between such views and dualist views, mainly turning on the difference between constitution and lawful correlation.

Neutral monist views hold that there is a more basic neutral substance underlying both the phenomenal and the physical. ‘Neutral’ here means that the underlying stuff really is neither phenomenal nor physical, so there is a good sense in which such a position is reductive: it explains the presence of the phenomenal by reference to something else more basic. This distinguishes it from the dual-aspect approach—on the dual-aspect view, the underlying stuff already possesses phenomenal (and physical) properties, while on neutral monism it does not. That leaves neutral monism with the challenge of explaining this reductive relationship, as well as explaining how the neutral substance underlies physical reality without itself being physical.

Panpsychism holds that the phenomenal is basic to all matter. Such views hold that the phenomenal somehow underwrites the physical or is potentially present at all times as a property of a more basic substance. This view must explain what it means to say that everything is conscious in some sense. Further, it must explain how it is that the basic phenomenal (or “protophenomenal”) elements combine to form the sorts of properties we are acquainted with in consciousness. Why is it that some combinations form the experiences we enjoy and others (presumably) do not?

One line of support for these types of views comes from the way that physical theory defines its basic properties in terms of their dispositions to causally interact with each other. For example, what it is to be a quark of a certain type is just to be disposed to behave in certain ways in the presence of other quarks. Physical theory is silent about what stuff might underlie or constitute the entities with these dispositions—it deals only in extrinsic or relational properties, not in intrinsic properties. At the same time, there is reason to hold that consciousness possesses nonrelational intrinsic qualities. Indeed, this may explain why we cannot know what it’s like to be a bat—that requires knowledge of an intrinsic quality not conveyable by relational description. Putting these two ideas together, we find a motivation for the sorts of views canvassed here. Basic physics is silent about the intrinsic categorical bases underlying the dispositional properties described in physical theory. But it seems plausible that there must be such bases—how could there be dispositions to behave thus-and-so without some categorical base to ground the disposition? And since we already have reason to believe that conscious qualities are intrinsic, it makes sense to posit phenomenal properties as the categorical bases of basic physical matter. Or we can posit a neutral substance to fill this role, one also realizing phenomenal properties when in the right circumstances.

These views all seem to avoid epiphenomenalism. Whenever there is a physical cause of behavior, the underlying phenomenal (or neutral) basis will be present to do the work. Indeed, that physical cause might itself be constituted by the phenomenal, in the senses laid out here. What’s more, there is nothing in conflict with physics—the properties posited appear at a level below the range of relational physical description. And they do not conflict with or preempt anything present in physical theory.

But we are left with several worries. First, it is again the case that phenomenal properties are posited at an extreme micro-level. How it is that such micro-phenomenal properties cohere into the sorts of experiential properties present in consciousness is unexplained. What’s more, if we take the panpsychic route, we are faced with the claim that every physical object has a phenomenal nature of some kind. This may not be incoherent, but it is a counterintuitive result. But if we do not accept panpsychism, we must explain how the more basic underlying substance differs from the phenomenal and yet instantiates it in the right circumstances. Simply saying that this just is the nature of the neutral substance is not an informative answer. Finally, it is unclear how these views really differ from a weakly reductionist account. Both hold that there is a basic and brute connection between the physical brain and phenomenal consciousness. On the weakly reductionist account, the connection is one of brute identity. On the dual-aspect/neutral monist/panpsychic account, it is one of brute constitution, where two properties, the physical and the phenomenal, constantly co-occur (because the one constitutes the categorical base of the other, or because they are aspects of a more basic stuff, and so on), though they are held to be metaphysically distinct. Is there any evidence that could decide between the views? The apparent differences here may be more a matter of style than of substance, despite the intricacies of these metaphysical debates.

4. References and Further Reading

- Albert, D. Z. Quantum Mechanics and Experience. Cambridge, MA: Harvard University Press, 1993.

- Armstrong, D. A Materialist Theory of Mind. London: Routledge and Kegan Paul, 1968.

- Armstrong, D. “What is Consciousness?” In The Nature of Mind. Ithaca, NY: Cornell University Press, 1981.

- Baars, B. A Cognitive Theory of Consciousness. Cambridge: Cambridge University Press, 1988.

- Baars, B. In The Theater of Consciousness. New York: Oxford University Press, 1997.

- Block, N. “Are Absent Qualia Impossible?” Philosophical Review 89: 257-74, 1980.

- Block, N. “On a Confusion about the Function of Consciousness.” Behavioral and Brain Sciences 18: 227-47, 1995.

- Block, N. “The Harder Problem of Consciousness.” Journal of Philosophy 99: 391-425, 2002.

- Block, N. & Stalnaker, R. “Conceptual Analysis, Dualism, and the Explanatory Gap.” Philosophical Review 108: 1-46, 1999.

- Broad, C.D. The Mind and its Place in Nature. London: Routledge and Kegan Paul, 1925.

- Campbell, K. K. Body and Mind. London: Doubleday, 1970.

- Carruthers, P. Phenomenal Consciousness. Cambridge: Cambridge University Press, 2000.

- Carruthers, P. Consciousness: Essays from a Higher-Order Perspective. New York: Oxford University Press, 2005.

- Chalmers, D.J. “Facing up to the Problem of Consciousness.” In Journal of Consciousness Studies 2: 200-19, 1995.

- Chalmers, D.J. The Conscious Mind: In Search of a Fundamental Theory. Oxford: Oxford University Press, 1996.

- Chalmers, D.J. “Phenomenal Concepts and the Explanatory Gap.” In T. Alter & S. Walter, eds. Phenomenal Concepts and Phenomenal Knowledge: New Essays on Consciousness and Physicalism. Oxford: Oxford University Press, 2006.

- Churchland, P.M. “Eliminative Materialism and the Propositional Attitudes.” Journal of Philosophy, 78, 2, 1981.

- Churchland, P. M. “Reduction, qualia, and the direct introspection of brain states.” Journal of Philosophy, 82, 8–28, 1985.

- Churchland, P. S. Neurophilosophy. Cambridge, MA: MIT Press, 1986.

- Collins, R. “Energy of the soul.” In M.C. Baker & S. Goetz eds. The Soul Hypothesis, London: Continuum, 2011.

- Crane, T. “The origins of qualia.” In T. Crane & S. Patterson, eds. The History of the Mind-Body Problem. London: Routledge, 2000.

- Crick, F. H. The Astonishing Hypothesis: The Scientific Search for the Soul. New York: Scribners, 1994.

- Dehaene, S. & Naccache, L. “Towards a cognitive neuroscience of consciousness: basic evidence and a workspace framework.” Cognition 79, 1-37, 2001.

- Dennett, D.C. “Why You Can’t Make a Computer that Feels Pain.” Synthese 38, 415-456, 1978.

- Dennett, D.C. “Quining Qualia.” In A. Marcel & E. Bisiach eds. Consciousness and Contemporary Science. New York: Oxford University Press, 1988.

- Dennett, D.C. Consciousness Explained. Boston: Little, Brown, and Co, 1991.

- Descartes, R. Meditations on first philosophy. In J. Cottingham, R. Stoothoff, & D. Murdoch, Trans. The philosophical writings of Descartes: Vol. 2, Cambridge: Cambridge University Press, 1-50, 1640/1984.

- Dretske, F. Naturalizing the Mind. Cambridge, MA: MIT Press, 1995.

- Farrell, B.A. “Experience.” Mind 59 (April):170-98, 1950.

- Feigl, H. “The ‘Mental’ and the ‘Physical.’” In H. Feigl, M. Scriven & G. Maxwell, eds. Minnesota Studies in the Philosophy of Science. Minneapolis: University of Minnesota Press, 1958.

- Foster, J. The Immaterial Self: A Defence of the Cartesian Dualist Conception of Mind. London: Routledge, 1991.

- Gennaro, R.J. Consciousness and Self-consciousness: A Defense of the Higher-Order Thought Theory of Consciousness. Amsterdam & Philadelphia: John Benjamins, 1996.

- Gennaro, R.J. “The HOT theory of consciousness: Between a rock and a hard place?” Journal of Consciousness Studies 12(2): 3-21, 2005.

- Gennaro, R.J. The Consciousness Paradox. Cambridge, MA: MIT Press, 2012.

- Griffin, D. R. Unsnarling the World-Knot: Consciousness, Freedom, and the Mind Body Problem. Berkeley: University of California Press, 1998.

- Hameroff, S. “Quantum Computation in Brain Microtubules? The Penrose-Hameroff ‘Orch OR’ Model of Consciousness.” In Philosophical Transactions of the Royal Society of London A 356: 1869-96, 1998.

- Harman, G. “The Intrinsic Quality of Experience.” In J. Tomberlin, ed. Philosophical Perspectives, 4. Atascadero, CA: Ridgeview Publishing, 1990.

- Hill, C. S. “Imaginability, conceivability, possibility, and the mind-body problem.” Philosophical Studies 87: 61-85, 1997.

- Hodgson, D. The Mind Matters: Consciousness and Choice in a Quantum World. Oxford: Oxford University Press, 1991.

- Hurley, S. Consciousness in Action. Cambridge, MA: Harvard University Press, 1998.

- Huxley, T. “On the hypothesis that animals are automata, and its history.” Fortnightly Review 95: 555-80, 1874.

- Jackson, F. “Epiphenomenal Qualia.” In Philosophical Quarterly 32: 127-136, 1982.

- Jackson, F. “What Mary didn’t Know.” In Journal of Philosophy 83: 291-5, 1986.

- Kim, J. Mind in a Physical World. Cambridge, MA: MIT Press, 1998.

- Kind, A. “What’s so Transparent about Transparency?” In Philosophical Studies 115: 225-244, 2003.

- Koch, C. The Quest for Consciousness: A Neurobiological Approach. Englewood, CO: Roberts and Company, 2004.

- Kriegel, U. “Consciousness as intransitive self-consciousness: Two views and an argument.” Canadian Journal of Philosophy, 33, 103–132, 2005.

- Kriegel, U. Subjective Consciousness: A Self-Representational Theory. Oxford: Oxford University Press, 2009.

- Leibniz, G. Monadology. In G. W. Leibniz: Philosophical Essays, R. Ariew & D. Garber eds. and trans., Indianapolis: Hackett Publishing Company, 1714/1989.

- Levine, J. “Materialism and Qualia: the Explanatory Gap.” In Pacific Philosophical Quarterly 64: 354-361, 1983.

- Levine, J. “On Leaving out what it’s like.” In M. Davies and G. Humphreys, eds. Consciousness: Psychological and Philosophical Essays. Oxford: Blackwell, 1993.

- Levine, J. Purple Haze: The Puzzle of Conscious Experience. Cambridge, MA: MIT Press, 2001.

- Lewis, C.I. Mind and the World Order. London: Constable, 1929.

- Lewis, D.K. “Psychophysical and Theoretical Identifications.” Australasian Journal of Philosophy 50: 249-258, 1972.

- Libet, B. Mind Time: The Temporal Factor in Consciousness. Cambridge, MA: Harvard University Press, 2004.

- Loar, B. “Phenomenal States.” In N. Block, O. Flanagan, and G. Güzeldere eds. The Nature of Consciousness. Cambridge, MA: MIT Press, 1997.

- Loar, B. “David Chalmers’s The Conscious Mind.” Philosophy and Phenomenological Research 59: 465-72, 1999.

- Lockwood, M. Mind, Brain and the Quantum. The Compound ‘I’. Oxford: Basil Blackwell, 1989.

- Lowe, E.J. Subjects of Experience. Cambridge: Cambridge University Press, 1996.

- Lycan, W.G. Consciousness. Cambridge, MA: MIT Press, 1987.

- Lycan, W.G. Consciousness and Experience. Cambridge, MA: MIT Press, 1996.

- Lycan, W.G. “A Simple Argument for a Higher-Order Representation Theory of Consciousness.” Analysis 61: 3-4, 2001.

- Maxwell, G. “Rigid designators and mind-brain identity.” Minnesota Studies in the Philosophy of Science 9: 365-403, 1979.

- McGinn, C. “Can we solve the Mind-Body Problem?” In Mind 98:349-66, 1989.

- McGinn, C. The Problem of Consciousness. Oxford: Blackwell, 1991.

- Nagel, T. “What is it like to be a Bat?” In Philosophical Review 83: 435-456, 1974.

- Nagel, T. The View from Nowhere. Oxford: Oxford University Press, 1986.

- Noë, A. Action in Perception. Cambridge, MA: MIT Press, 2005.

- Noë, A. Out of Our Heads: Why You Are Not Your Brain, and Other Lessons from the Biology of Consciousness. New York: Hill & Wang, 2009.

- Papineau, D. “Physicalism, Consciousness, and the Antipathetic Fallacy.” Australasian Journal of Philosophy 71: 169-83, 1993.

- Papineau, D. Thinking about Consciousness. Oxford: Oxford University Press, 2002.

- Penrose, R. The Emperor’s New Mind: Computers, Minds and the Laws of Physics. Oxford: Oxford University Press, 1989.

- Penrose, R. Shadows of the Mind. Oxford: Oxford University Press, 1994.

- Perry, J. Knowledge, Possibility, and Consciousness. Cambridge, MA: MIT Press, 2001.

- Popper, K. & Eccles, J. The Self and Its Brain: An Argument for Interactionism. Berlin, Heidelberg: Springer, 1977.

- Quine, W.V.O. “Two Dogmas of Empiricism.” Philosophical Review, 60: 20-43, 1951.

- Rey, G. “A Question About Consciousness.” In N. Block, O. Flanagan, and G. Güzeldere eds. The Nature of Consciousness. Cambridge, MA: MIT Press, 461-482, 1997.

- Robinson, H. Matter and Sense. Cambridge: Cambridge University Press, 1982.

- Robinson, W. S. Brains and People: An Essay on Mentality and its Causal Conditions. Philadelphia: Temple University Press, 1988.

- Robinson, W.S. Understanding Phenomenal Consciousness. New York: Cambridge University Press, 2004.

- Rosenberg, G. A Place for Consciousness: Probing the Deep Structure of the Natural World. Oxford: Oxford University Press, 2005.

- Rosenthal, D.M. “Two Concepts of Consciousness.” Philosophical Studies 49: 329-59, 1986.

- Rosenthal, D.M. Consciousness and Mind. Oxford: Clarendon Press, 2005.

- Russell, B. The Analysis of Matter. London: Kegan Paul, 1927.

- Ryle, G. The Concept of Mind. London: Hutchinson, 1949.

- Shear, J. ed. Explaining Consciousness: The Hard Problem. Cambridge, MA: MIT Press, 1997.

- Skrbina, D. Panpsychism in the West. Cambridge, MA: MIT/Bradford Books, 2007.

- Spinoza, B. Ethics. E. Curley, trans. New York: Penguin, 1677/2005.

- Stapp, H. Mind, Matter, and Quantum Mechanics. Berlin: Springer-Verlag, 1993.

- Stoljar, D. “Two Conceptions of the Physical.” Philosophy and Phenomenological Research 62: 253–281, 2001.

- Stoljar, D. “Physicalism and Phenomenal Concepts.” Mind and Language 20: 469–494, 2005.

- Strawson, G. Real Materialism and Other Essays. Oxford: Oxford University Press, 2008.

- Strawson, P. Individuals: An Essay in Descriptive Metaphysics. London: Methuen, 1959.

- Stubenberg, L. Consciousness and Qualia. Philadelphia & Amsterdam: John Benjamins Publishers, 1998.

- Swinburne, R. The Evolution of the Soul. Oxford: Oxford University Press, 1986.

- Tye, M. Ten Problems of Consciousness. Cambridge, MA: MIT Press, 1995.

- Tye, M. Consciousness, Color, and Content. Cambridge, MA: MIT Press, 2000.

- Van Gulick, R. “Higher-Order Global States (HOGS): An Alternative Higher-Order Model of Consciousness.” In R. Gennaro, ed. Higher-Order Theories of Consciousness: An Anthology. Amsterdam and Philadelphia: John Benjamins, 2004.

- Van Gulick, R. “Mirror Mirror – Is That All?” In U. Kriegel & K. Williford, eds. Self-Representational Approaches to Consciousness. Cambridge, MA: MIT Press, 2006.

- Wegner, D. The Illusion of Conscious Will. Cambridge, MA: MIT Press, 2002.

- Whitehead, A.N. Process and Reality: An Essay in Cosmology. New York: Macmillan, 1929.

- Wilkes, K.V. “Is Consciousness Important?” British Journal for the Philosophy of Science 35: 223-43, 1984.

- Williford, K. “The Self-Representational Structure of Consciousness.” In U. Kriegel & K. Williford, eds. Self-Representational Approaches to Consciousness. Cambridge, MA: MIT Press, 2006.

- Wittgenstein, L. Philosophical Investigations. Oxford: Blackwell, 1953.

Josh Weisberg. 2016. “Hard Problem of Consciousness | Internet Encyclopedia of Philosophy.” Accessed March 15. http://www.iep.utm.edu/hard-con/.